_(cropped).jpg/500px-Charlie_Kirk_(54670961811)_(cropped).jpg) |

Charlie must have lived it up to look wise beyond his 30 years. RIP. |

|

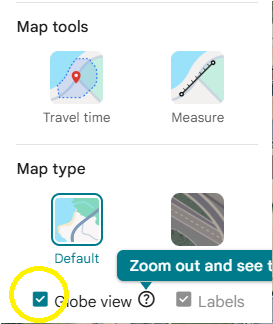

You have to enable Globe view in Google Maps to be able to rotate the map, FYI.

1) Define the mission & constraints

- Primary goal: early warning of likely threats seen from above (e.g., person prone on a rooftop, arms extended in a firing posture).
- Environment: dense crowd, variable lighting, lots of false-positive look-alikes (photographers kneeling, people on balconies, HVAC techs on roofs, etc.).
- Responsibility: the system should assist trained security; it must not be your only line of defense.


2) Legal/operational guardrails (US-centric)

- Operations over people: Flying over crowds is tightly regulated. Part 107 allows “operations over people” only if the aircraft meets Category 1–4 requirements (energy limits, declared compliance, etc.) or via specific waivers, and you’ll still need airspace authorizations where applicable. Plan this first with a Part 107 certificated pilot/ops team. (Source: Federal Aviation Administration)
- Remote ID: now generally required for registered drones; compliance is enforced. (Sources: Federal Aviation Administration; Ag & Natural Resources College)
- Local law & privacy: State and city rules vary; event surveillance can trigger additional restrictions and disclosure duties. Coordinate with venue, local authorities, and counsel. (Sources: Facit Data Systems; Brookings; The Washington Post)
- Ops reality: Many public-safety teams use drones, but policies, geofencing behavior, and public expectations keep shifting; plan for scrutiny and transparency. (Source: The Washington Post)

3) What CV can (and can’t) do today

- Firearm/long-gun detection: Modern one-stage detectors (e.g., YOLO-family) can flag weapons in clear, near-field views with decent precision, but accuracy drops at long range, low resolution, odd angles, occlusions, and harsh lighting. Peer-reviewed work continues to report promising lab/curated results, but “in the wild,” real-time performance often degrades. Expect both false negatives and false positives. (Sources: arXiv; Nature)
- Pose cues: Combining person detection + pose estimation (arms extended toward a line-of-sight) can cut false alarms compared to weapon-only detection, but remains fragile when the subject is small in frame or partially hidden. Use pose as a signal, not sole proof. (Sources: arXiv; ScienceDirect)
- Prone/rooftop heuristics: Recognizing “prone on rooftop” is usually a scene understanding problem: detect people, segment rooftops/edges, infer posture. It’s feasible, but you need careful thresholds and human review.
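
One way to treat pose as a signal rather than sole proof is to fuse the separate cues into a single score that only decides whether a human should look. The sketch below is a minimal illustration, not a validated method: the `Signals` fields, the weights, and both thresholds are hypothetical placeholders that would have to be tuned on real footage.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Per-track confidence scores in [0, 1] from upstream models (hypothetical)."""
    person: float       # person detector confidence
    on_rooftop: float   # overlap of the person with a segmented rooftop region
    prone_pose: float   # pose model's confidence that the subject is prone
    long_object: float  # object detector's confidence in a long-gun-like shape


# Illustrative values only; all of these are assumptions to be tuned.
WEIGHTS = {"on_rooftop": 0.3, "prone_pose": 0.4, "long_object": 0.3}
REVIEW_THRESHOLD = 0.5
MIN_PERSON_CONF = 0.4


def fused_score(s: Signals) -> float:
    """Weighted sum of cues, gated on a person actually being detected."""
    if s.person < MIN_PERSON_CONF:
        return 0.0
    return (WEIGHTS["on_rooftop"] * s.on_rooftop
            + WEIGHTS["prone_pose"] * s.prone_pose
            + WEIGHTS["long_object"] * s.long_object)


def triage(s: Signals) -> str:
    """Return 'review' to queue the track for a human, otherwise 'ignore'."""
    return "review" if fused_score(s) >= REVIEW_THRESHOLD else "ignore"
```

Note that the only outputs are "review" and "ignore": the score never raises an alarm by itself, which keeps the human reviewer in the decision path.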

4) System architecture (safe, high-level)

A. Airframe & optics

- Multirotor with Category 1–4 compliance (as applicable), low mass (safer over people), redundant link, and a gimbal.
- Optics: stabilized zoom (20–40× equivalent) for distant roofs; consider dual-sensor (RGB + thermal) to help at dusk/night and to pick out prone bodies against hot rooftops. (Thermal reduces but doesn’t eliminate false alarms.)
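
To see why zoom matters, it helps to estimate how many pixels a person occupies at a given distance. The sketch below uses a simple pinhole-camera approximation; the function name and the example field-of-view values are assumptions for illustration, not specifications of any particular camera.

```python
import math


def pixels_on_target(target_size_m: float, distance_m: float,
                     hfov_deg: float, image_width_px: int) -> float:
    """Approximate horizontal pixel extent of a target (pinhole-camera model)."""
    # Width of the scene covered by the full frame at this distance.
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return target_size_m / scene_width_m * image_width_px


# Illustrative numbers: a 0.5 m wide torso seen from 100 m in a 1920 px wide frame.
wide = pixels_on_target(0.5, 100.0, hfov_deg=80.0, image_width_px=1920)
tele = pixels_on_target(0.5, 100.0, hfov_deg=4.0, image_width_px=1920)
print(f"wide lens: {wide:.1f} px, zoomed: {tele:.1f} px")
```

Under these assumed values the wide view leaves fewer than 6 px across the torso, while the narrow view gives roughly 137 px, which is the difference between an undetectable blob and something a detector or a human can actually assess.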

B. Compute & link

- Edge compute (e.g., compact GPU module on the drone or tethered ground unit) to run CV at ≥20–30 FPS on 1080p crops.
- Downlink: robust, low-latency digital video to a ground safety cell (monitors + human operator) who confirm/triage alerts.

C. Perception pipeline

- Stage 1: Region-of-interest (ROI) focus (rooftops, balconies, elevated perimeters) via geofenced polygons on a pre-surveyed map.
- Stage 2: Person detection (find humans).
- Stage 3: Posture/pose cues (prone, arms extended).
- Stage 4: Object cues (long-gun/handgun–like shapes).
- Stage 5: Multi-frame confirmation (require consistent evidence over N frames + small camera re-angle to reduce single-frame artifacts).
- Stage 6: Human-in-the-loop validation before alarming the principal.
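
Two of these stages are easy to sketch without any ML dependencies: the ROI filter (Stage 1) and multi-frame confirmation (Stage 5). The code below is a minimal illustration under stated assumptions: ROI polygons are given in the same coordinate system as the detections, and "consistent evidence over N frames" is interpreted as a k-of-n vote. The class name and the default `n`/`k` values are hypothetical, and the camera re-angle step is not modeled.

```python
from collections import deque
from typing import Deque, List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: is point p inside the polygon (vertices in order)?"""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from p toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


class TemporalConfirmer:
    """Flag a candidate only when at least k of the last n frames are positive."""

    def __init__(self, n: int = 10, k: int = 7) -> None:
        self.k = k
        self.history: Deque[bool] = deque(maxlen=n)

    def update(self, frame_positive: bool) -> bool:
        """Record one frame's result and return whether the vote passes."""
        self.history.append(frame_positive)
        return sum(self.history) >= self.k
```

A detection would first be dropped if its center falls outside every ROI polygon, and only surviving detections would feed `update()`. A passing vote should still go to the operator (Stage 6), not straight to an alarm.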

D. Alerting

- Integrate with a vetted haptics pattern on a secure device (distinct long buzz + 3 beeps is fine), and a separate channel to the protective detail (radio/dispatch). The alert should include a thumbnail + map bearing for immediate action.
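
As a rough sketch of what such an alert could carry, the code below computes a compass bearing from the principal's position to the flagged location using the standard initial great-circle bearing formula, and bundles it with a thumbnail reference and a haptic pattern. The `Alert` fields, the pattern durations, and the `build_alert` helper are all hypothetical; no specific phone or radio API is assumed.

```python
import math
from dataclasses import dataclass
from typing import Tuple


def initial_bearing_deg(lat1: float, lon1: float,
                        lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    east = math.sin(dlon) * math.cos(phi2)
    north = (math.cos(phi1) * math.sin(phi2)
             - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return (math.degrees(math.atan2(east, north)) + 360.0) % 360.0


# Alternating vibrate/pause durations in ms, starting with vibrate:
# one long buzz, then three short pulses. Durations are assumptions.
LONG_BUZZ_THEN_THREE = (800, 200, 150, 150, 150, 150, 150)


@dataclass
class Alert:
    bearing_deg: float
    thumbnail_path: str
    haptic_pattern_ms: Tuple[int, ...] = LONG_BUZZ_THEN_THREE


def build_alert(principal: Tuple[float, float], target: Tuple[float, float],
                thumbnail_path: str) -> Alert:
    """Assemble an alert once an operator has confirmed a detection."""
    bearing = initial_bearing_deg(principal[0], principal[1], target[0], target[1])
    return Alert(bearing_deg=round(bearing, 1), thumbnail_path=thumbnail_path)
```

Sending the bearing relative to the principal, rather than raw coordinates, is a design choice: it gives the protective detail a direction to act on without needing a map lookup.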

5) Reliability engineering & UX

- Pre-event site survey: map vantage points, list legitimate rooftop workers/positions, and mark friendly cameras/press stands to reduce false positives.
- Test under event-like conditions: distance, glare, crowd clutter, similar objects (tripods, monopods, umbrellas, microphones).
- Latency budget: detection → human check → alert must be seconds, not tens of seconds.
- Fail-safe behavior: What happens if the drone loses link, drifts, or needs an emergency descent? You need standard operating procedures.
- Data policy: retention limits, on-device blurring outside ROIs if possible; coordinate with counsel before recording attendees at scale. (Source: Facit Data Systems)

6) Team & process

- People: Part 107 RPIC + visual observers; a CV engineer; security lead to own rules of engagement; legal/compliance owner.
- Training: operators practice manual confirm, e.g., auto-bookmark suspected frame, yaw/zoom for a second angle before escalating.
- Coordination: notify venue and local PD in advance; integrate with their comms plan. Public-safety drone programs are common now, but transparency matters. (Source: The Washington Post)

7) Build phases (suggested)

- Paper design & legal check: pick a drone class that can legally operate near/over people under Part 107 categories or secure a plan for protected perimeters that avoid over-people flight; confirm Remote ID and airspace. (Source: Federal Aviation Administration)
- Bench CV prototype: run person+pose+weapon detectors on archived rooftop footage to gauge real-world precision/recall at your expected distances. Expect to tune thresholds heavily. (Source: arXiv)
- Dry-run with actors on an empty venue (actors mimic benign vs. suspicious postures). Measure latency and false alarms.
- Supervised pilot at a small event with clear perimeters, robust signage, and explicit notification to attendees.
- Scale cautiously: add thermal, better zoom, improved tracking, and logging for post-event model tuning (within your privacy policy).

8) What to expect difficulty-wise

- Hard: sustained accuracy at long range on moving platforms; permissions to fly near/over crowds; keeping false alerts low enough that you still trust the system; staffing a human-in-the-loop 100% of the time.
- Moderate: getting a stable airborne platform with zoom and a good downlink; building a usable alerting UX.
- Straightforward: making the phone buzz the way you want once an operator confirms an alert.

Bottom line

- Technically plausible as an assistive layer, not a silver bullet.
- Treat it as decision support for trained security, with rigorous legal compliance and privacy safeguards.
- Start with non-over-crowd perimeters and human-verified alerts, then iterate.

Minimal Pilot Plan

1. Objectives

- Primary test: Can a drone-mounted camera + CV pipeline consistently flag a prone person or extended-arm posture on a rooftop at realistic distances?
- Secondary test: Can alerts reach you (or a designated operator) with low latency and low false-positive rates?
- Exclusions: no crowd overflight, no live weapons, no public deployment.


2. Equipment

- Drone:
  - A sub-250 g quadcopter (e.g., DJI Mini series), which simplifies “flying over people” compliance in early tests.
  - Gimbal with at least 3× optical zoom (or high-resolution sensor for digital crops).
- Compute:
  - Laptop or small edge device (Jetson Xavier, Coral Dev Board) for running detection models.
  - Ground link: use the standard controller feed, and capture the video stream so it can be fed to the model in real time.
- Models:
  - Pretrained person detection (e.g., YOLOv8).
  - Pose estimation (arms extended, prone).
  - Optional: off-the-shelf weapon-detection model (expect limited reliability, but useful for testing).
- Alerting:
  - Simple phone app or script that buzzes your phone when an operator “confirms” a detection.

-

3. Test Script

- Site survey: pick a safe, empty building or rooftop environment with clear line of sight.
- Actors: have volunteers mimic scenarios:
  - Standing casually.
  - Kneeling (like photographers).
  - Lying prone (no weapon).
  - Lying prone with a prop (e.g., tripod, broom).
  - Standing with arms extended (tripod vs. mock rifle silhouette).
- Flight profile:
  - Hover 50–100 m away, point camera at roof.
  - Record continuous footage, run detection pipeline live.
- Data capture:
  - Log true positives, false positives, false negatives.
  - Time from “action begins” to “alert received.”
- Alert loop:
  - Operator sees flagged frame, decides if it’s worth escalating, then taps “confirm.”
  - You (or test recipient) receive distinct buzz pattern on phone.

-

4. Evaluation Metrics

- Detection accuracy: % of correct detections at >50 m.
- False positives: e.g., prone sunbather, tripod mistaken for gun.
- Latency: camera → detection → operator confirm → phone buzz (target ≤5 sec).
- Operator load: how often they’re overwhelmed by spurious alerts.
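
These metrics can be computed directly from the trial log. The sketch below assumes one record per staged scenario; the `Trial` fields and the `summarize` helper are hypothetical names, and the default 5-second target is the one stated above. "Detection accuracy" is reported here as precision and recall, which is one reasonable reading of the metric.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    threat_posture: bool               # ground truth: actor staged a "suspicious" posture
    alerted: bool                      # operator confirmed and an alert was sent
    latency_s: Optional[float] = None  # action start to phone buzz, if alerted


def summarize(trials: List[Trial], latency_target_s: float = 5.0) -> dict:
    """Compute precision, recall, false-positive count, and latency compliance."""
    tp = sum(t.threat_posture and t.alerted for t in trials)
    fp = sum((not t.threat_posture) and t.alerted for t in trials)
    fn = sum(t.threat_posture and (not t.alerted) for t in trials)
    # Only true-positive alerts count toward the latency target.
    latencies = [t.latency_s for t in trials
                 if t.threat_posture and t.alerted and t.latency_s is not None]
    return {
        "precision": tp / (tp + fp) if tp + fp else None,
        "recall": tp / (tp + fn) if tp + fn else None,
        "false_positives": fp,
        "worst_latency_s": max(latencies) if latencies else None,
        "latency_target_met": (all(v <= latency_target_s for v in latencies)
                               if latencies else None),
    }
```

Reporting the worst-case latency rather than the mean is deliberate: a single slow alert is what matters for an early-warning system.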

5. Phased Progression

- Phase 0 (bench): run models on stored rooftop footage.
- Phase 1 (field, empty site): actors on roof, drone hovering, no public exposure.
- Phase 2 (controlled event): small gathering with perimeter security, drone watches rooftops only, alerts go to operator, not to you directly.
- Phase 3 (scaling): if results are promising, consider heavier drone with thermal + zoom, legal approvals for operations near people, and integration into a full security workflow.

6. Safety & Legal Guardrails

- Stay sub-250 g for pilot tests to simplify compliance.
- Don’t fly over uninvolved people.
- No real weapons; use safe props.
- Treat video as sensitive: purge after testing unless needed for training.
- Always have a licensed Part 107 pilot if scaling beyond hobby/research use.

✅ By the end of this pilot you’ll know:

- Whether CV models can actually spot “prone with long object” at useful distances.
- How often false alarms overwhelm the system.
- Whether the alerting loop is practical under real conditions.
